This editorial is published in the BISE Journal Vol. 61 Issue 4.

Christof Weinhardt, Wil van der Aalst, Oliver Hinz

Why we are introducing Registered Reports

Last February, the BISE Editorial Board met for its annual Editorial Board Meeting in Siegen with a number of new and interesting ideas on the agenda. One topic that was discussed and settled was the introduction of a new submission format – Registered Reports – for the recently established “Human-Computer Interaction and Social Computing” department. Experimental research is the dominant paradigm in this department, which makes it a good candidate for this innovative approach to evaluating research results. Registered Reports are a promising format to encourage and support high-quality (and also risky and innovative) experimental research and to ensure rigorous scientific practice. As experimental research is gaining importance in our journal through our new department, but also in other areas such as Economics of IS, adopting Registered Reports is also part of our strategy to maintain and develop our status as a high-quality community journal and to make it even more attractive for potential authors in the respective areas.

Within the last decade, the scientific landscape was shaken by a series of reports showing that many scientific studies are difficult or impossible to replicate or reproduce in a subsequent investigation, either by independent researchers or by the original researchers themselves. This problem is known as the “Replication Crisis”. Though the focus was on psychology, biology and medicine, our domain – Information Systems – and related fields are no exception (Coiera et al. 2018; Head et al. 2015; Hutson 2018). Several questionable scientific practices – unfortunately so common that almost everyone knows them – are more or less related to this problem (Chambers 2015; Chambers et al. 2014). One is a set of methods called “p-hacking”. A common metaphorical paraphrase of p-hacking, funny and sad at the same time, is “torturing the data until they confess” (e.g., Probst and Hagger 2015). P-hacking means, for example, introducing or removing control variables or switching statistical tests in order to obtain significant p-values. This is often combined with an underpowered study design in which the number of observations is increased iteratively until the results match the expectations (very likely only due to variance). HARKing (hypothesizing after the results are known) is another questionable method, which consists in adapting hypotheses to the data after a study has been performed. Simpson’s paradox is the well-known phenomenon that a trend may appear in several different groups of data but disappear or reverse when these groups are combined. This illustrates that seemingly conflicting conclusions are possible for a given data set. These phenomena lead to the situation that a considerable number of published findings are, in fact, false positives. Finally, a lack of data sharing makes many results unverifiable.
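To make the sample-size point concrete, the following minimal Python sketch simulates the practice of adding observations until a test turns significant. It is an illustration under stated assumptions, not an analysis from any of the cited studies: the data are pure noise (the null hypothesis is true), the test is a two-sided z-test with known variance, and the function names, the look schedule (every 20 observations up to 100), and the number of simulated studies are hypothetical choices.

```python
import random
from statistics import NormalDist, fmean


def p_value_z(sample, sigma=1.0):
    """Two-sided p-value of a z-test of 'mean = 0' with known sigma (assumed)."""
    z = fmean(sample) / (sigma / len(sample) ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rate(peek, n_start=20, n_step=20, n_max=100,
                        alpha=0.05, n_sims=2000, seed=1):
    """Share of simulated null studies that report a significant result.

    peek=False: one planned test after n_max observations.
    peek=True:  test after n_start, n_start + n_step, ... observations and
                stop at the first significant result (optional stopping).
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        # Pure noise: there is no true effect to find.
        data = [rng.gauss(0.0, 1.0) for _ in range(n_max)]
        # Testing growing prefixes of the data is equivalent to collecting
        # observations in batches and re-testing after each batch.
        looks = range(n_start, n_max + 1, n_step) if peek else [n_max]
        if any(p_value_z(data[:n]) < alpha for n in looks):
            hits += 1
    return hits / n_sims


if __name__ == "__main__":
    print("single planned test:", false_positive_rate(peek=False))
    print("optional stopping:  ", false_positive_rate(peek=True))
```

Under these assumptions, the single planned test should reject in roughly 5% of the simulated studies, whereas testing repeatedly and stopping at the first significant result rejects noticeably more often, even though no effect exists.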

Although almost everyone agrees that the quality, relevance, and importance of a research project are not determined by the mere fact that all results are significant, the truth is that there is a publication bias towards research that reports positive results in terms of p-values (Chambers et al. 2014). As a result, a researcher’s investment only pays off if the results are significant, which makes methods like p-hacking and HARKing attractive in borderline cases. In addition, riskier research, even when built on a solid theoretical foundation, is less attractive and less likely to be published.

Registered Reports are one possibility to address most of these issues. Right now, 168 journals from the fields of Psychology, Medicine, Pharmaceutics, Biology, Neuroscience, Economics, Political Science, and Education are offering Registered Reports – however, no Information Systems journal is among them (Center For Open Science 2019). A quick look at the list of references of this editorial (most of which, by the way, are editorials themselves) confirms this impression. Registered Reports are not yet applied in Information Systems journals; however, their potential makes them worth discussing and testing. This is the reason why we decided to adopt this novel evaluation approach in our new department “Human-Computer Interaction and Social Computing” for the upcoming two years.

How Registered Reports Work

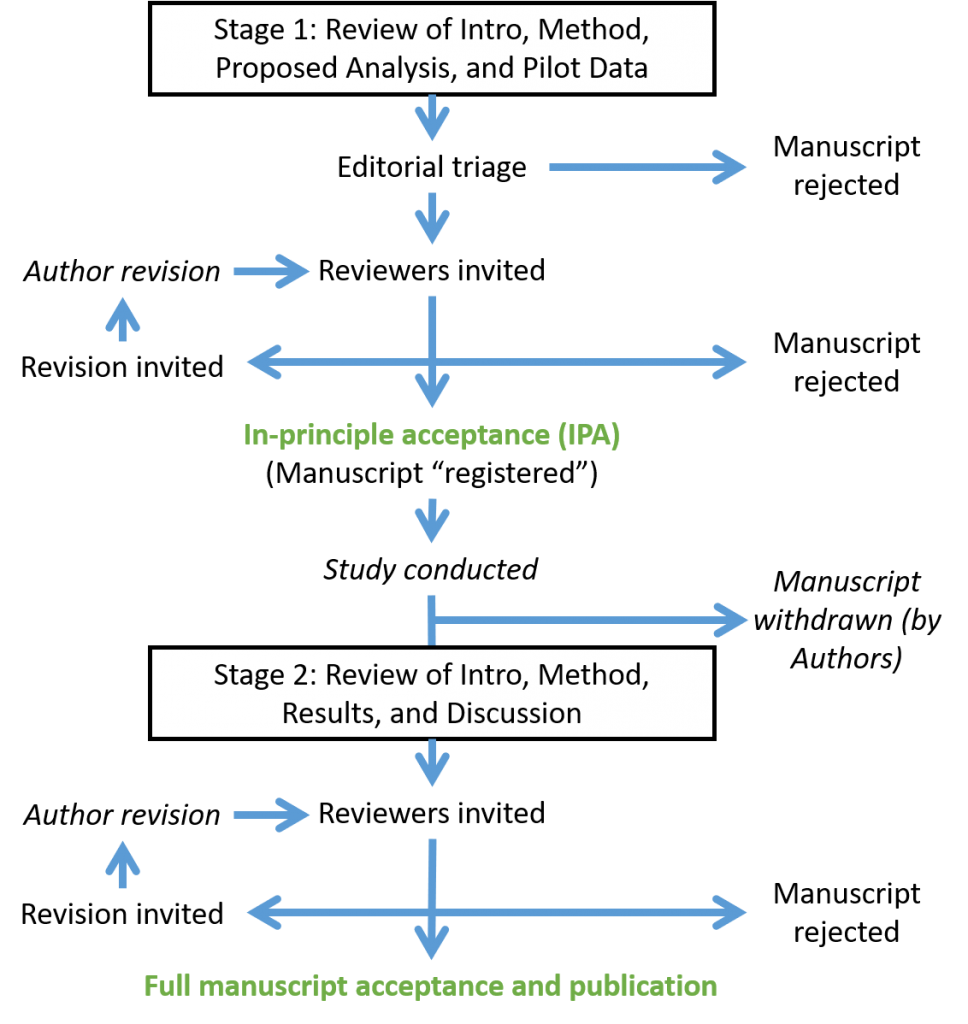

Figure 1 illustrates how Registered Reports work. In Stage 1, a study protocol is submitted that is essentially an empirical article including an introduction, related work, hypothesis development and the research model, as well as the methodology including the research design and the proposed analysis procedure. Optionally, it can include pilot data. The review of the submission is guided by the following criteria (adapted from Probst and Hagger 2015):

- The significance of the research question(s) and the potential contribution of findings to our discipline.

- The logic, rationale, and plausibility of the proposed hypotheses.

- The rigor of the proposed methodology and statistical analysis including a power analysis.

- The extent to which the methodology is sufficiently clear for an independent investigator to be able to replicate the procedures and analysis.

Stage 1 is a full review process. Its outcome can be any outcome that is common for a standard submission: Reject, Revise or (in-principle) Accept. An “in-principle acceptance (IPA)” means that the study will be published provided that it is performed exactly as proposed (including the proposed statistical evaluation). Stage 1 is intended to find all weak points in the theory, study design, and proposed evaluation before it is too late (and potentially very expensive). As soon as all reviewers agree that the methodology adheres to standards of rigor and the results can be expected to be (incontestably) valid, the study can be performed. At this point in time, the abstract of the study protocol will be published under the authors’ names on our BISE website. First, this step prevents the original idea for the study from being pirated by any other party, and second, it ensures that the authors do not (mis)use the Registered Report as a vehicle for feedback in order to prepare their study for any other outlet.

According to the main idea of Registered Reports, the hypotheses cannot be changed after the in-principle acceptance. This does not mean that alternative hypotheses may not be proposed if the initial ones could not be confirmed. In Stage 2, the full paper undergoes a second peer review process, which checks whether the study protocol was implemented and whether the reasons for potential changes are comprehensible (for guiding questions see Chambers et al. 2014). A rejection is still possible at this stage, namely if the study’s execution and analysis diverged too much from the proposed study design. The usual outcome, however, should be an acceptance decision. The refinement of the discussion and conclusion sections may still require one or two review rounds, but these are likely to be rather fast.

Advantages and Critical Comments

The obvious benefits of Registered Reports are that they cut back on questionable research practices, emphasize the importance of good research practice on a sound theoretical base, and improve the reproducibility of research – but there are more:

Registered Reports can make researchers more sensitive to the meaning of p-values. There is a need for a clear distinction between confirmatory analysis, where the p-value is actually meaningful, and exploratory analysis, where p-values lose their meaning due to the unknown inflation with respect to the alpha level (Nosek and Lakens 2014). Nevertheless, this does not mean that Registered Reports can only be used for confirmatory studies. They can also handle data from pilot studies in the initial submission and thereby even allow exploratory and risky research to be backed by a highly rigorous scientific process and peer validation (Jonas and Cesario 2016). In addition, the concern of some scientists that Registered Reports may restrict creativity is not really substantial, as an accepted study design does not prevent researchers from reporting further interesting findings, as long as they do so in a transparent way (Chambers 2015).
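The inflation with respect to the alpha level can be illustrated with a simplified calculation. Assume, purely for illustration, that an analysis runs a known number k of independent tests, each at the same alpha level and with every null hypothesis true; the chance of at least one false positive is then 1 - (1 - alpha)^k. In real exploratory work the number of tests is usually unknown and the tests are rarely independent, which is why the inflation cannot be quantified there. The function name below is hypothetical.

```python
def familywise_error_rate(n_tests, alpha=0.05):
    """Probability of at least one false positive among n_tests independent
    tests at level alpha when all null hypotheses are true (assumed)."""
    return 1 - (1 - alpha) ** n_tests


if __name__ == "__main__":
    for k in (1, 5, 10, 20):
        print(k, round(familywise_error_rate(k), 3))
```

With alpha = 0.05, ten such tests already give a chance of about 40% of obtaining at least one spurious significant result.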

A further point is that Registered Reports may give (young) researchers confidence that their research question and design are perceived as sufficiently rigorous and relevant by peers (Sarnecka and Barbara 2018). This may encourage researchers to submit to our journal. However, Registered Reports should not become an instrument for inexperienced researchers to “improve” their study protocols by soliciting high-quality feedback. It is important to make sure that BISE reviewers do not only try to improve the study protocol, but also stringently reject weak submissions.

It is an open question whether adopting Registered Reports results in more effort for the author or the reviewer. Bloomfield et al. (2018) analyzed papers submitted to a conference and conclude that the upfront ‘investment’ of the author(s) is increased and the follow-up investment reduced. However, the overall effort to perform experiments seemed to exceed the typical level of effort in this (accounting) outlet. Also, the interviews conducted with authors and reviewers afterwards raised very interesting considerations, which, however, go beyond the scope of an editorial. There are no reliable data on whether the workload for reviewers increases or decreases. The additional stage in the publication process may suggest an increased workload. It is, however, also possible that it significantly shortens the second round (high-quality Information Systems journals often perform two or more rounds anyhow). In addition, one can look at this from a global perspective. Faulty experiments are often submitted and rejected in several attempts at several outlets, passing through numerous reviewers’ hands (Chambers et al. 2014). This is very unlikely to happen for Registered Reports, meaning that the overall workload for reviewers is reduced, although the individual reviewer may not feel this.

On top of this, the reviewers’ level of satisfaction might be much higher. In a Registered Report, they may take the chance to really shape the paper instead of “just” giving feedback after things have already gone “wrong” and cannot be changed anymore. Although this may increase their motivation (Jones 2018), it may have the negative consequence that reviewers put great effort into the paper without any reward. Our gut feeling is that both effects are very likely to occur. The missing reward may be addressed by introducing concepts of “Open Peer Review”, whose possible future implementation is currently under discussion in the BISE Editorial Board.

An argument often brought up against Registered Reports concerns their high requirements regarding statistical power (usually 90%). This may be a problem for a journal if a statistically underpowered study can be published in another journal with a comparable reputation (Pain 2015). However, we do not see this in our community yet. Nevertheless, the methodological spectrum of our field is growing, and we are starting to adopt fMRI studies and other experiments using expensive equipment, which may be chronically underpowered due to financial restrictions. However, instead of scaring off these researchers, we may provide them with a platform to advertise their research and to take other research groups on board in order to meet the power requirements. An in-principle accepted manuscript may lower the scepticism about joining a risky and expensive project.
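To give a sense of what such a power requirement means for sample sizes, the sketch below uses the standard normal approximation for a two-sided comparison of two group means. The effect sizes, the alpha level of 0.05, and the function name are illustrative assumptions and not BISE requirements; an exact calculation based on the t distribution yields slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size, power=0.90, alpha=0.05):
    """Approximate participants per group for a two-sided two-sample test.

    effect_size is the standardized mean difference (Cohen's d).
    Normal approximation: n = 2 * ((z_{1 - alpha/2} + z_{power}) / d) ** 2.
    """
    z = NormalDist().inv_cdf
    return ceil(2 * ((z(1 - alpha / 2) + z(power)) / effect_size) ** 2)


if __name__ == "__main__":
    for d in (0.2, 0.5, 0.8):
        print(d, n_per_group(d, power=0.80), n_per_group(d, power=0.90))
```

Under these assumptions, a medium standardized effect (d = 0.5) requires about 85 participants per group at 90% power, compared with about 63 at 80% power; for a small effect (d = 0.2), the requirement rises to more than 500 per group. This illustrates why studies that rely on expensive equipment can struggle to meet the threshold without collaboration.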

Besides the power problem, the field of research as well as the community and its culture play a major role in introducing such a submission format. In strongly empirical fields, Registered Reports can help substantially to maintain a high level of quality for our journal. We are excited to see their impact within our Human-Computer Interaction and Social Computing department.

It was discussed in our Editorial Board meeting that the time taken to complete the initial review process (Stage 1) can be a critical variable since the authors submitting the study protocol are probably just waiting in the wings. Long delays may negatively impact the reception of the format. We will keep this in mind and will do our best to accelerate the process to provide feedback as soon as possible.

To change the angle of view from the manuscript to the outlet, it is also important to see what Registered Reports do to our journal. They are likely to reduce publication bias (towards positive results) and subsequently lead to more negative results being published. It was brought up in the Editorial Board Meeting that such papers are less likely to be cited. They do not actually provide a finding, as null hypothesis significance testing does not allow one to conclude that a non-significant result offers direct support for the null hypothesis. In fact, we have no experience that would let us estimate this effect or how it weighs against the positive effects on citations that may result from offering Registered Reports (popularity, perceived innovativeness, credibility). It is likely that Registered Reports can still improve the quality and impact of the research and, subsequently, the overall quality of our journal.

Certainly, there may be some other issues to discuss with respect to Registered Reports. At this point, we would like to refer interested readers to Chambers et al. (2014), where the authors discuss 25 more or less valid criticisms of the format.

It is not yet clear whether Registered Reports will become a gold standard for experimental research in Information Systems. We decided to take this step forward and give the format the opportunity to prove itself and to gain acceptance in our community. In doing so, the BISE journal will also further push the boundaries of our discipline and help ensure the credibility of research results.

Next Steps

Registered Reports are not a panacea and do not solve all problems, but they are a first step in the right direction, towards a credible and transparent research process. In the end, it is about credibility – and about how our community is prepared to move research forward together in a healthy mix of cooperation and competition. We will share our experiences in two years and are already looking forward to that.

We are more than happy to hear your opinion and your ideas on this topic. This editorial is also available on our webpage, where comments can be added at the end of the document. We encourage you to join an interesting and constructive discussion at https://www.bise-journal.com/registered_reports – Thank you!

References

Bloomfield R, Rennekamp K, Steenhoven B (2018) No System Is Perfect: Understanding How Registration-Based Editorial Processes Affect Reproducibility and Investment in Research Quality. Journal of Accounting Research 56(2):313–362. https://doi.org/10.1111/1475-679X.12208

Center for Open Science (2019) Center for Open Science – Registered Reports. https://cos.io/rr/. Accessed 12 March 2019

Chambers BC (2015) Elsevier editors’ update – Cortex’s Registered Reports: how Cortex’s Registered Reports initiative is making reform a reality. https://www.elsevier.com/editors-update/story/peer-review/cortexs-registered-reports. Accessed 12 March 2019

Chambers CD, Feredoes E, Muthukumaraswamy SD, Etchells PJ (2014) Instead of “playing the game” it is time to change the rules: Registered Reports at AIMS Neuroscience and beyond. AIMS Neurosci 1(1):4–17. https://doi.org/10.3934/Neuroscience2014.1.4

Coiera E, Ammenwerth E, Georgiou A, Magrabi F (2018) Does health informatics have a replication crisis? J Am Med Inform Assoc 25(8):963–968. https://doi.org/10.1093/jamia/ocy028

Head ML, Holman L, Lanfear R, Kahn AT, Jennions MD (2015) The extent and consequences of P-Hacking in science. PLoS Biol 13(3):1–15. https://doi.org/10.1371/journal.pbio.1002106

Hutson M (2018) Artificial intelligence faces reproducibility crisis. Sci 359(6377):725–726. https://doi.org/10.1126/science.359.6377.725

Jonas KJ, Cesario J (2016) How can preregistration contribute to research in our field? Compr Results Soc Psychol 1(1–3):1–7. https://doi.org/10.1080/23743603.2015.1070611

Jones J (2018) The Wiley Network – Registered Reports: good for both science and authors. https://www.wiley.com/network/researchers/being-a-peer-reviewer/registered-reports-good-for-both-science-and-authors. Accessed 15 March 2019

Nosek BA, Lakens D (2014) Registered Reports. Soc Psychol 45(3):137–141. https://doi.org/10.1027/1864-9335/a000192

Pain E (2015) Sciencemag.org – Register your study as a new publication option. https://doi.org/10.1126/science.caredit.a1500282

Probst TM, Hagger MS (2015) Advancing the rigour and integrity of our science: the Registered Reports initiative. Stress Health 31(3):177–179. https://doi.org/10.1002/smi.2645

Sarnecka BW, Barbara W (2018) Sarnecka Lab Blog – On writing and life in a cognitive science lab. https://sarneckalab.blogspot.com/2018/08/chapter-7-imrad-hourglass-of-empirical.html. Accessed 14 March 2019